In a quest to harness the power of generative AI, Google is making waves in online shopping with its latest feature. Launching as part of a series of updates to Google Shopping, this innovation promises to transform how we browse for clothes online.

But what exactly is it, and how does it work? Let’s delve into the details of Google’s groundbreaking virtual try-on tool.

A Closer Look at Virtual Try-On

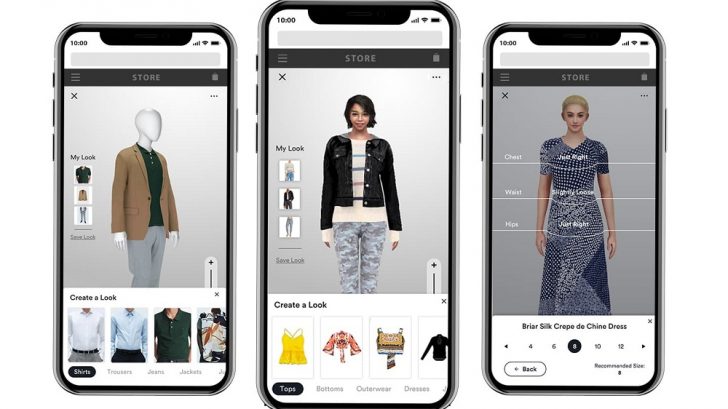

Imagine previewing how a piece of clothing would look on different models before purchasing. Well, that’s precisely what Google’s virtual try-on tool offers. It’s a game-changer in online shopping, and here’s how it works.

Powered by a diffusion-based model that Google developed in-house, the tool takes a single image of a garment and predicts how it would drape, fold, cling, stretch, and even form wrinkles and shadows on a lineup of real fashion models shown in a variety of poses.

The Magic of Diffusion Models

At the heart of this technology are diffusion models, which have already proven their mettle in text-to-art generators like Stable Diffusion and DALL-E 2. These models learn to gradually subtract noise from a starting image made entirely of noise, refining it step by step until it matches the desired target.
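To make the "step by step" idea concrete, here is a toy sketch of a diffusion sampling loop on a four-pixel "image". A real model trains a neural network to predict the noise in an image; this sketch swaps in a hypothetical `oracle_predict_noise` function that already knows the target, so only the denoising loop itself is illustrated. The linear noise schedule and the deterministic DDIM-style update are assumptions chosen for simplicity, not details Google has disclosed about its model.

```python
import math
import random


def make_schedule(num_steps):
    """Fraction of clean signal kept at each noise level t = 0..num_steps.

    Assumed linear schedule: alpha_bar[0] = 1 is a clean image, and the
    values shrink toward 0 (almost pure noise) as t grows.
    """
    return [1.0 - t / (num_steps + 1) for t in range(num_steps + 1)]


def oracle_predict_noise(x_t, alpha_bar_t, target):
    # Hypothetical stand-in for the trained network: because it "knows" the
    # target, it can compute exactly which noise separates x_t from it.
    s = math.sqrt(alpha_bar_t)
    n = math.sqrt(1.0 - alpha_bar_t)
    return [(xi - s * yi) / n for xi, yi in zip(x_t, target)]


def denoise(target, num_steps=50, seed=0):
    rng = random.Random(seed)
    alpha_bar = make_schedule(num_steps)
    # Start from an "image" made entirely of Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    history = [x]
    for t in range(num_steps, 0, -1):
        eps = oracle_predict_noise(x, alpha_bar[t], target)
        s_t, n_t = math.sqrt(alpha_bar[t]), math.sqrt(1.0 - alpha_bar[t])
        # Clean image implied by the predicted noise.
        x0_hat = [(xi - n_t * ei) / s_t for xi, ei in zip(x, eps)]
        # Deterministic (DDIM-style) step to the slightly less noisy level t-1.
        s_prev = math.sqrt(alpha_bar[t - 1])
        n_prev = math.sqrt(1.0 - alpha_bar[t - 1])
        x = [s_prev * a + n_prev * e for a, e in zip(x0_hat, eps)]
        history.append(x)
    return x, history


target = [0.2, -0.5, 0.9, 0.0]
result, history = denoise(target, num_steps=50, seed=0)
for step in (0, 25, 50):
    gap = max(abs(a - b) for a, b in zip(history[step], target))
    print(f"step {step}: largest pixel error {gap:.4f}")
```

With a learned predictor instead of the oracle, each step would only approximately remove noise, which is why real systems run many small steps rather than one big jump.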

To train this model, Google used pairs of images, each pair showing the same person wearing the same garment in two different poses. For example, one image might show someone wearing a shirt while standing sideways, while the other shows them facing forward. To make the model robust, the process was repeated with random image pairs covering many different garments and people.
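The pairing scheme described above can be sketched as a small data-preparation step. Google has not published its pipeline, so the `Photo` record, the file paths, and the helpers `build_pairs` and `sample_batch` are all hypothetical. The sketch simply groups photos of the same person in the same garment, pairs up shots taken in different poses (one plausible reading: one photo supplies the garment, the other is the target), and then samples pairs at random so each batch mixes different people and clothes.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Photo:
    # Hypothetical record: who is pictured, what they wear, and how they stand.
    person_id: str
    garment_id: str
    pose: str
    path: str


def build_pairs(photos):
    """Pair photos of the same person in the same garment but different poses."""
    groups = defaultdict(list)
    for p in photos:
        groups[(p.person_id, p.garment_id)].append(p)
    pairs = []
    for group in groups.values():
        for src in group:
            for tgt in group:
                if src.pose != tgt.pose:
                    # (source, target): garment seen in one pose, same outfit in another.
                    pairs.append((src, tgt))
    return pairs


def sample_batch(pairs, batch_size, seed=None):
    """Draw random pairs so a batch mixes different people and garments."""
    rng = random.Random(seed)
    return rng.sample(pairs, min(batch_size, len(pairs)))


photos = [
    Photo("p1", "shirt", "side", "p1_shirt_side.jpg"),
    Photo("p1", "shirt", "front", "p1_shirt_front.jpg"),
    Photo("p2", "dress", "front", "p2_dress_front.jpg"),
    Photo("p2", "dress", "back", "p2_dress_back.jpg"),
    Photo("p3", "jacket", "front", "p3_jacket_front.jpg"),  # no second pose, so unused
]
pairs = build_pairs(photos)
batch = sample_batch(pairs, batch_size=2, seed=42)
for src, tgt in batch:
    print(f"{src.path} -> {tgt.path}")
```

Pairing shots of the same person and garment means the only thing that changes between input and target is the pose, which is what the model has to learn to handle.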

A Shopping Revolution

Today, U.S. shoppers using Google Shopping can virtually try on women’s tops from renowned brands like Anthropologie, Everlane, H&M, and LOFT. Look for the new “Try On” badge in Google Search results. You’ll have to wait a little longer for men’s tops, which are set to launch later in the year.

Boosting Confidence in Online Shopping

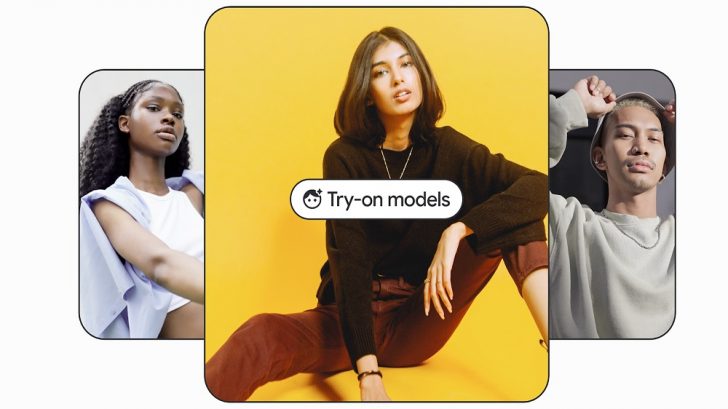

Lilian Rincon, senior director of consumer shopping products at Google, understands shoppers’ apprehensions when buying clothes online. According to a survey, 42% of online shoppers don’t feel represented by images of models, and 59% feel disappointed when an item doesn’t look as expected.

Rincon emphasizes that online shoppers should feel as confident as those trying on clothes in a store. With this virtual try-on tool, Google aims to bridge the gap between the online and in-store shopping experience.

Not Alone in the Game

Virtual try-on technology isn’t entirely new. Amazon, Adobe, Walmart, and AI startup AIMIRR have all explored similar concepts. Walmart, for instance, lets customers use their own photos to model clothing online, and AIMIRR uses real-time garment rendering technology to overlay clothing images on live video.

Addressing Diversity

While generative AI continues to reshape the fashion industry, it’s not without its challenges. Some fashion models argue that it perpetuates long-standing inequalities in the industry. Models, many of whom work as independent contractors, often face high agency commission fees and substantial business expenses. Diversity is another problem: one survey found that, as of 2016, 78% of models in fashion adverts were white.

Aware of these issues, Google used real models of diverse backgrounds, spanning various sizes, ethnicities, skin tones, body shapes, and hair types. While this is a step in the right direction, questions remain about how this feature might affect traditional photo shoot opportunities for models.

Enhanced Shopping Experience

As Google rolls out its virtual try-on feature, it’s also launching filtering options for clothing searches, all powered by AI and visual matching algorithms. These filters allow users to refine their searches across stores using criteria such as color, style, and pattern.

“In a physical store, associates can help you find other options based on what you’ve already tried on,” Rincon explains. “Now, you can get that extra hand when you shop for clothes online.”